Freelap USA: What types of jump testing do you utilize with your athlete populations?

Craig Turner and Nate Brookreson: A staple test utilized between our sports is the countermovement vertical jump assessment. This is performed on a dual force platform using the ForceDecks software (from Vald Performance) and is our primary go-to for jump assessment within our athlete groups. This form of jump has an abundance of supporting research demonstrating good validity and reliability for athlete populations. We utilize this test most frequently with our athletes, due to its ability to analyze different phases in the jump (i.e., eccentric and concentric phase, duration and forces produced). This allows for the identification of several key variables (discussed later) that help us understand both the movement strategy and the performance of maximal jumping efforts in a time-efficient manner (~1 minute per athlete).

Most recently, we have begun to evaluate the force-velocity characteristics of our athletes in more detail. This method was inspired by the research of Jimenez-Reyes et al. (2016). We extend the countermovement jump test by performing additional CMJ repetitions (2 reps) at differing absolute loads (e.g., 20 kg, 40 kg, and 60 kg for a male soccer player; 15 kg, 25 kg, and 40 kg for a female soccer player). This allows us to evaluate the force-velocity relationship with increased external load (i.e., higher force output and lowered velocity).

The choice of absolute loads versus relative loads is due to the linear relationship of force-velocity that we see as these loads increase (see the work of JB Morin or Pierre Samozino for more detail on this topic). One rule is that the athlete must jump >4 inches for that load to be included within the analysis. We can then compare the athlete’s profile to a “predicted optimal profile” to assess which end of the force-velocity curve the athlete needs to work on to further improve jump height.
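As a rough illustration of how loaded CMJ trials can be turned into a force-velocity profile, the sketch below fits a straight line to mean force and mean velocity and applies the >4 inch inclusion rule. It is a minimal sketch, not the ForceDecks workflow: the trial values, field names, and the function `fit_force_velocity` are all hypothetical, and the outputs F0, v0, and Pmax = F0 × v0 / 4 simply follow from the assumed linear model.

```python
MIN_JUMP_HEIGHT_CM = 4 * 2.54  # the ">4 inches" inclusion rule, in centimeters


def fit_force_velocity(trials):
    """Fit force = f0 + slope * velocity by ordinary least squares."""
    # Keep only loads where the athlete cleared the minimum jump height.
    valid = [t for t in trials if t["jump_height_cm"] > MIN_JUMP_HEIGHT_CM]
    velocities = [t["mean_velocity_ms"] for t in valid]
    forces = [t["mean_force_n"] for t in valid]
    n = len(valid)
    mean_v = sum(velocities) / n
    mean_f = sum(forces) / n
    sxx = sum((v - mean_v) ** 2 for v in velocities)
    sxy = sum((v - mean_v) * (f - mean_f) for v, f in zip(velocities, forces))
    slope = sxy / sxx             # N per (m/s); negative for a normal profile
    f0 = mean_f - slope * mean_v  # theoretical force at zero velocity
    v0 = -f0 / slope              # theoretical velocity at zero force
    p_max = f0 * v0 / 4           # apex of the power curve for a linear F-v line
    return {"f0": f0, "v0": v0, "slope": slope, "p_max": p_max, "n_trials": n}


# Hypothetical trials for one athlete (invented numbers, not real data).
trials = [
    {"load_kg": 0, "mean_velocity_ms": 1.40, "mean_force_n": 1600.0, "jump_height_cm": 35.0},
    {"load_kg": 20, "mean_velocity_ms": 1.20, "mean_force_n": 1800.0, "jump_height_cm": 26.0},
    {"load_kg": 40, "mean_velocity_ms": 1.00, "mean_force_n": 2000.0, "jump_height_cm": 18.0},
    {"load_kg": 60, "mean_velocity_ms": 0.80, "mean_force_n": 2200.0, "jump_height_cm": 12.0},
    {"load_kg": 80, "mean_velocity_ms": 0.70, "mean_force_n": 2500.0, "jump_height_cm": 8.0},
]
profile = fit_force_velocity(trials)
print(profile)
```

The 80 kg trial is dropped because the jump is under 4 inches, so the line is fitted to the remaining four loads. Comparing the fitted slope with an optimal profile requires the additional modeling described by Samozino and Morin, which this sketch does not attempt.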

Differences in jump assessment tools also exist between our sports, which help us delve further into specific qualities that may be relevant for that specific sport. For example, with women’s basketball we assess an approach vertical jump, and a lateral bound and stick. Another example would be within soccer, where we utilize a broad jump and single leg broad jump, given the suggested associations with horizontal force production mechanisms and other athletic qualities such as acceleration. Such tests further represent qualities that are utilized within the sports themselves and provide more specificity to inform our training approach.

Freelap USA: How specifically does working with a wide range of jump types (such as seen in swimming athletes) impact how you view jumping in sport?

Craig Turner and Nate Brookreson: A close examination of the sports we work with currently would be all that’s necessary to formulate an approach to jump training. Therefore, a detailed needs analysis is performed within each of our sports to direct the decision-making process around what jumps are appropriate to monitor these athletes.

For example, notational analysis of basketball reveals that between 40 and 60 maximal jumps occur during competition. So, from the perspective of specific preparation, jump training is necessary and therefore should be formally assessed in the testing setting. Additionally, avoiding many of the injuries associated with women’s basketball, primarily of the ankle and knee, requires the athlete to land optimally and effectively attenuate force.

When designing the testing battery for women’s basketball, we examine several jump strategies to determine the underlying physical qualities needed for success in the sport. The countermovement jump on the force plates allows us to reliably measure lower body explosive power, as well as determine the force-velocity relationship of the athletes. One-step vertical jump testing is an examination of the transfer of horizontal to vertical force, which is a characteristic of many discrete activities on the basketball court (such as rebounding from the perimeter, jump shooting off the dribble, helping defense, and a one-step layup). The lateral bound and stick looks at the generation of power in the frontal plane and reflects the ability to cover distance in shuffling actions, which make up around a quarter of the movement happening on the court. These jumps all represent unique, discrete actions that occur on the court and are reliable from a test-retest perspective.

While swimming is a sport that has unique physical and kinematic properties, testing still needs to be reliable and sensitive to change (explained below). The goal of any testing is to inform the athlete and coach about actual changes in performance. Therefore, we utilize squat and countermovement jump testing with our swim population.

The start and walls represent a greater relative proportion of the race at the collegiate level due to swimming in a short course yards (SCY) pool rather than the long course meters (LCM) events seen in the Olympics. Additionally, research has examined the relationship between countermovement jump variables and sprint performance, with several studies reporting a correlation between lower body explosive power in a CMJ and start performance (Carvahlo et al., 2017; Maglischo et al., 2003). While some argue that the kinetics of the start are more complex than a CM or squat jump (Benjanuvatra et al., 2007), CM and squat jumps have similar loading strategies and lower limb contribution characteristics. In the future, it would be beneficial to examine the transfer of jump monitoring to specific event performance, particularly in the collegiate SCY population.

Freelap USA: What daily, weekly, or monthly markers do you assess in your sports to measure progress?

Craig Turner and Nate Brookreson: A brief overview of some of the variables we utilize in our feedback approach is outlined below:

Daily/Weekly:

The CMJ test is typically utilized 1-2 times weekly within most of our sports. This test not only gives us a measure for jump performance improvement, but also a representation of athlete readiness (i.e., is the athlete’s performance declining or are movement strategies changing due to fatigue, etc.). Table 1 shows a breakdown of some of the key performance indicators and how we use them.

| Variable | How It’s Measured | How We Use It |
| --- | --- | --- |
| Jump Height (cm/inches) | Estimated from flight time. | Provides insight into overall physical jump performance. Primary feedback mechanism for athletes (due to ease of understanding). |
| Relative Peak Power (W/kg) | Highest power achieved during the jump relative to body weight. | To assess overall power improvements, individualized to the athlete’s weight. Secondary feedback mechanism for athletes (to compare with peers). |
| Concentric Impulse (Ns) | Total force exerted in the concentric phase multiplied by time taken. | Provides information to assess the concentric phase of the jump and how this influences jump performance. |
| Force @ Zero Velocity (N) | Force exerted at concentric phase onset (i.e., velocity is at zero). | Lowered force output may represent diminished neuromuscular function (a means of monitoring fatigue). Targeted strength training may also look to improve force generating qualities, reflected in higher F@0 output. |
| Eccentric Duration (s) | Duration of the eccentric phase in seconds. | May represent a change in movement mechanics (i.e., a longer eccentric/concentric phase), which may provide insights into fatigue and jump strategy utilized. |
| Concentric Duration (s) | Duration of the concentric phase in seconds. | May represent a change in movement mechanics (i.e., a longer eccentric/concentric phase), which may provide insights into fatigue and jump strategy utilized. |

Table 1. A breakdown of some of the key performance indicators the countermovement jump test measures for, and how we use them.
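For readers curious how jump height can be estimated from flight time, the following is a minimal sketch of the standard projectile relationship, height = g × t² / 8. It assumes the athlete takes off and lands in the same body position, and it is not necessarily the exact calculation the force plate software performs. The function names and the 0.50 s flight time are illustrative only.

```python
G = 9.81  # gravitational acceleration in m/s^2


def jump_height_from_flight_time(flight_time_s):
    """Return estimated jump height in centimeters.

    The body rises for half of the flight time, so
    height = 0.5 * g * (t / 2) ** 2 = g * t ** 2 / 8.
    """
    height_m = G * flight_time_s ** 2 / 8
    return height_m * 100


def cm_to_inches(value_cm):
    """Convert centimeters to inches for athlete-facing feedback."""
    return value_cm / 2.54


height_cm = jump_height_from_flight_time(0.50)  # hypothetical flight time
height_in = cm_to_inches(height_cm)
print(f"{height_cm:.1f} cm ({height_in:.1f} in)")
```

Because the estimate depends on the square of flight time, small differences in landing posture (for example, tucking the legs) can inflate the result, which is one reason consistent jump instructions matter.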

Monthly/Seasonal:

We use our other jump-based tests more periodically as part of the sports testing battery to assess the outcomes of different time points during the collegiate-athlete calendar. Typically, we observe a pre-season start and pre-season end measure, as well as a mid-season and post-season measure (when appropriate). For certain athletes (i.e., those on specific development programs), these measures are used more routinely in relation to the periodized strategy (e.g., pre-mid-post six-week training block within off-season). Additionally, these measures are utilized routinely within our return to sport protocols when an athlete is progressing back from injury. These tests shape some of the athlete criteria needed to inform progressions to more speed- and conditioning-based (specific to the sport) tests.

We utilize the analytical approach described below to assess each athlete’s progressions within these specific tests. This approach is a framework to monitor our athletes.

Step 1: Familiarization

First, we look to understand the specific measurement within the population. When first testing, we conduct a series of repetitions (generally 3-6) for the given test. We then evaluate the athlete’s coefficient of variation (CV% = standard deviation / mean × 100) for the given test measure. This gives us a quick representation of how much that athlete varies within the test. We can also assess whether they are getting better at the test as a result of a “warm-up effect” (e.g., jump height increases with the number of repetitions completed), or if their strategy has large variations, potentially indicating a lack of familiarity or some external factors influencing the test (e.g., fatigue/motivation).
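A short sketch of the familiarization check might look like the following. The jump heights are invented, and the helper names are hypothetical. The "best rep is last" flag is only one simple way to spot a possible warm-up effect.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """Return CV% = sample standard deviation / mean * 100."""
    return stdev(values) / mean(values) * 100


def best_rep_is_last(values):
    """Flag a possible warm-up effect: the highest value came on the final rep."""
    return values.index(max(values)) == len(values) - 1


# Hypothetical jump heights (cm) from three repetitions by one athlete.
jumps_cm = [30.0, 31.0, 32.0]
cv_percent = coefficient_of_variation(jumps_cm)
warm_up_flag = best_rep_is_last(jumps_cm)
print(f"CV = {cv_percent:.2f}%, best rep on final attempt: {warm_up_flag}")
```

In this example the athlete improves on every repetition, so the flag is raised and either more reps or a modified warm-up would be worth considering.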

Generally speaking, it is typically the former with our athlete populations, as jump tests (such as the CMJ and broad jump) are commonly used in the training process and learning effects are typically small. In the case that we see a “warm-up effect” and the athlete keeps getting better across the repetitions and hits their highest jump on the final rep (within our performance testing battery we generally cap it at three repetitions due to time constraints), we then either:

1) Add reps until jump height plateaus; or

2) Look to modify this athlete’s warm-up strategy so that peak jump height is hit within the desired three-repetition range.

Step 2: Understanding Noise

Think of “noise” as the measurement error of the test. When things become noisier, it becomes increasingly difficult to hear what is going on. So, as the word implies, more noise = more error, and more error makes our lives more difficult when interpreting testing data—especially when we are attempting to judge if an athlete has improved or not (i.e., understanding a real change by separating that change from the test error). Therefore, it is important to try and understand what the “noise” of the test we are employing is. This means we need to understand where noise comes from and then quantify it.

One way of understanding the test noise is to measure an athlete multiple times over a period of time when we don’t expect this athlete’s performance to change. For this, we typically use 4-6 testing occasions within the first 14 days of a training program (though others have suggested using more test occasions), as this is what is practical for us to complete. Then we calculate the within-subject standard deviation (this can be easily calculated in programs like Excel). This value would represent the noise.
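As a sketch of that calculation outside of Excel, the snippet below computes a within-subject standard deviation from repeated tests. It pools the individual variances across athletes, which assumes each athlete completed the same number of testing occasions. The athlete labels and scores are invented.

```python
from math import sqrt
from statistics import variance


def within_subject_sd(scores_by_athlete):
    """Pool each athlete's sample variance, then take the square root.

    Assumes every athlete has the same number of repeated tests.
    """
    variances = [variance(scores) for scores in scores_by_athlete.values()]
    return sqrt(sum(variances) / len(variances))


# Hypothetical jump heights (cm) over four testing occasions.
repeated_tests = {
    "athlete_a": [30.0, 31.0, 32.0, 31.0],
    "athlete_b": [40.0, 41.0, 39.0, 40.0],
}
noise_cm = within_subject_sd(repeated_tests)
print(f"Within-subject SD (noise): {noise_cm:.2f} cm")
```

For a single athlete, the same idea reduces to the sample standard deviation of that athlete's own repeated scores.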

We may not always get the opportunity to perform multiple tests, so we also have another way of calculating the test noise. Here we look to conduct a “reliability” type study with our athletes. Ideally, you want to conduct the reliability study over the time length that you’re going to be utilizing, though this may not always be feasible for tests performed several weeks apart. Therefore, we typically conduct two tests separated by a period of seven days (conducted at the same time of day within the training week, following an off day).

We use such a selection because no major performance improvements should be shown within this period and other influences (e.g., fatigue) should be limited due to the preceding off day. This allows us to begin to understand potential “noise” associated with the test metrics. This begins to examine the reliability associated with these measures, which becomes a useful source of information for our further monitoring in subsequent tests.

Example:

We conducted a baseline jump test (CMJ) at the start of the pre-season with a group of athletes utilizing a force plate. We then conducted the same test seven days later following a day off. From this data, we can calculate the jump height and then the difference between these two test scores. In a perfect world, there would be no difference between these tests as athletes shouldn’t have had any performance improvements.

However, when it comes to athlete testing, perfect is a rare commodity. Unfortunately, there will likely be variation in the measurement. This typically comes from two sources:

1) Technical error of the measurement device (i.e., the calculations used to estimate jump height from the force plate and software).

2) Biological variation induced by the athlete (causing natural day-to-day fluctuations in the measurement).

Luckily, we can use programs like Microsoft Excel and helpful statistical spreadsheets to calculate the amount of this variation (see Will Hopkins’ Sportsci.org for resources). Simply, we can calculate the difference score for each athlete (Day 2 minus Day 1), then the standard deviation (SD) of these difference scores (known as the between-subject SD of differences), then divide this number by the square root of 2 (as we have two testing days). This calculation then provides us with our “standard error of measurement,” which can also be referred to as “typical error” (i.e., the typical error we would likely expect from the test).

Equation 1: Typical error = standard deviation of difference scores / √(2)
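Equation 1 can be sketched in a few lines of code. The two sets of scores below are invented for illustration and are not the data behind the example in this article.

```python
from math import sqrt
from statistics import stdev


def typical_error(day1_scores, day2_scores):
    """Typical error = SD of the difference scores / sqrt(2)."""
    differences = [d2 - d1 for d1, d2 in zip(day1_scores, day2_scores)]
    return stdev(differences) / sqrt(2)


# Hypothetical jump heights (cm) for four athletes tested seven days apart.
day1 = [30.0, 35.0, 28.0, 40.0]
day2 = [31.0, 34.0, 30.0, 40.0]
te_cm = typical_error(day1, day2)
print(f"Typical error: {te_cm:.2f} cm")
```

The scores must be paired, meaning each position in `day1` and `day2` belongs to the same athlete.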

Within our example for jump height, we calculated this error as approximately 1 cm (0.96 cm, rounded up) in raw units. So, if one of the athletes improves by 0.5 cm (a value that is less than the test noise), we are less confident that a real improvement has occurred, because the change sits within the measurement error. Recognizing this allows us to accept the uncertainty around some of our measures, and the same calculation can be performed on any of the variables collected. Simply put, not every performance change is a real change.

Step 3: Understanding a Practical Important Change

Within all our tests, what we are really interested in is the smallest improvement that influences performance. There are two main methods that we can utilize to calculate thresholds that represent this practically important change. One method is referred to as the “minimal clinically important difference.” For this, we can typically look at the research and find when our measure has been used to predict performance outcomes and utilize this value or conduct our own analysis.

However, for most of our tests we have looked to use a method based on the Cohen’s effect size principle. For this, we look at how our athletes’ performances vary compared to one another (between subject SD) and apply a factor to determine the change required to move their position within the distribution. For the most part, we utilize testing batteries that are physical surrogates to actual competitive performance. Therefore, we use the following equation to calculate our smallest worthwhile change (SWC):

Equation 2: SWC = between subject standard deviation * 0.2

(Where the between subject SD is taken from the baseline scores of all the athletes from Test Day 1.)

The SWC can often be thought of as the “signal” within our test (the smallest practical change). In an ideal world, this signal would be greater than our test noise. However, in physical performance tests the noise is generally greater than the signal. This doesn’t necessarily mean that the test isn’t useable (though if the noise is four times greater than SWC, you should perhaps consider finding a better test).

For our earlier example of jump height, we had a noise of 1 cm. Using the above smallest worthwhile change equation on Test Day 1, we calculated the SWC as 0.68 cm. You quickly realize that the SWC < noise (0.68 cm < 1 cm). As these values are pretty close (the SWC is greater than half the noise), we can still use this test.

Luckily, there are resources out there that can help us interpret these changes. A very simple rule of thumb is that a change larger than the signal plus the noise has a greater likelihood of being meaningful. Additionally, if we want to calculate higher boundaries (e.g., a moderate or large change), we can multiply the standard deviation by a higher value (0.6 for moderate, 1.2 for large).
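The thresholds and the signal-versus-noise comparison can be sketched as follows. The baseline scores are invented, while the 1 cm noise and 0.68 cm SWC are the values from the jump height example. The helper names are hypothetical.

```python
from statistics import stdev


def change_thresholds(baseline_scores):
    """Scale the between-subject SD by 0.2, 0.6, and 1.2."""
    between_sd = stdev(baseline_scores)
    return {
        "small": 0.2 * between_sd,     # smallest worthwhile change (SWC)
        "moderate": 0.6 * between_sd,
        "large": 1.2 * between_sd,
    }


def noise_to_signal_ratio(noise, swc):
    """How many times larger the test noise is than the SWC."""
    return noise / swc


# Hypothetical Test Day 1 jump heights (cm) for a small group.
baseline_cm = [30.0, 32.0, 34.0, 36.0, 38.0]
thresholds = change_thresholds(baseline_cm)

# Values from the jump height example: noise = 1 cm, SWC = 0.68 cm.
ratio = noise_to_signal_ratio(1.0, 0.68)
print(thresholds, f"noise is {ratio:.2f}x the SWC")
```

A ratio of roughly 1.5 is well below the four-times level at which a different test would be worth considering.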

Step 4: Analysis and Feedback

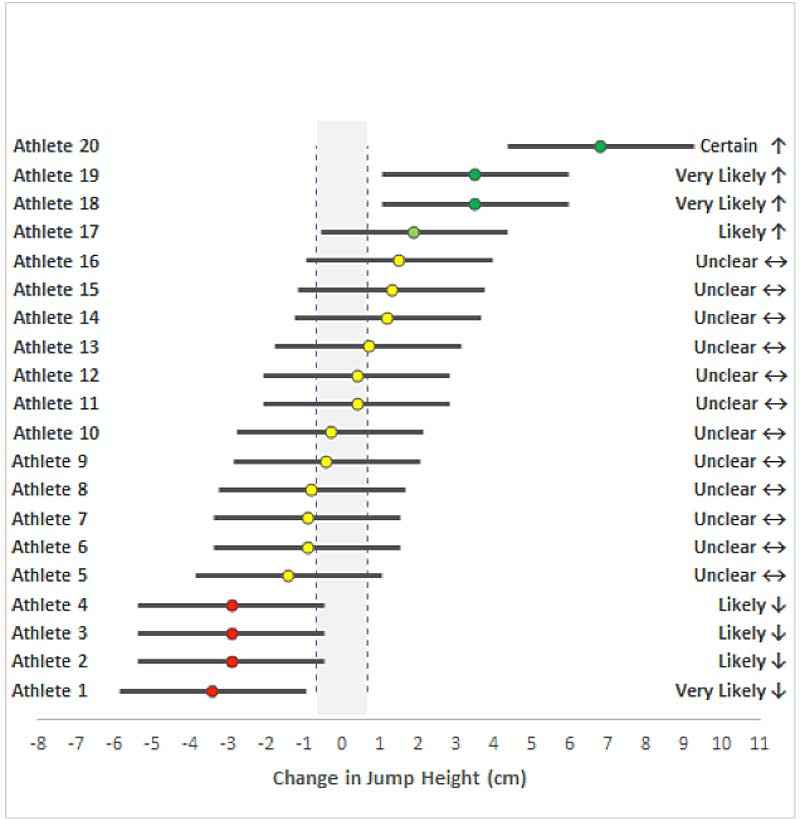

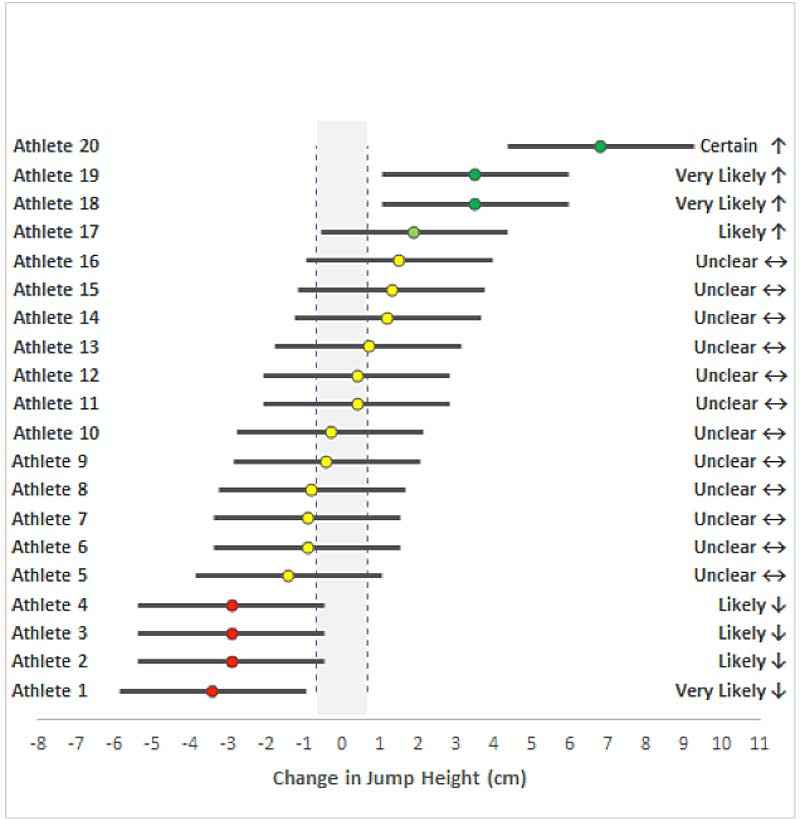

Now that we have our values of test noise and signal, we are in a position to monitor and analyze our data efficiently, enabling us to make fast decisions within our feedback approaches to athletes and coaches. Figure 2 below is a visual for taking our jump height example above and applying it to 20 female soccer athletes tested at the beginning and end of pre-season.

Deconstructing Figure 2, we have a grey shaded area that represents our SWC (0.68 cm). We have each athlete’s value and we have a range of true values that each of the athletes could have performed, known as the confidence interval (black shaded bars). The confidence interval was calculated using the Excel TINV function. For a 90% CI, the equation was as follows:

Equation: = TINV(0.1,16) *(sqrt(2)*1) = 2.47 cm

(0.1 represents 90% probability; 16 = degrees of freedom; sqrt(2)*1 is the adjusted typical error)

We can now start to provide a magnitude-based inference around our data utilizing the work of Hopkins et al. We see Athletes 1-4 have a reduced jump height (red dots), suggesting a negative response to pre-season. Athletes 5-16 were “unclear” (yellow dots). As their boundary of true values crosses both the increased and reduced sides of change, we cannot be sure “statistically” whether these athletes have changed. Athletes 17-20 increased (green dots), suggesting an improvement following the pre-season training period.
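One way to express this decision logic in code is sketched below. The t-value is hard-coded as an approximation of Excel's TINV(0.1, 16). The classification rule is a simplified reading of the description above (a change is "unclear" when its interval spans both the increased and reduced sides of the SWC), not a full magnitude-based inference calculation. The function names and example changes are hypothetical.

```python
from math import sqrt

T_CRIT_90_DF16 = 1.746  # approximates Excel TINV(0.1, 16)


def ci_half_width(typical_error, t_crit=T_CRIT_90_DF16):
    """Half-width of the 90% CI for a change score: t * sqrt(2) * typical error."""
    return t_crit * sqrt(2) * typical_error


def classify_change(change, swc, half_width):
    """Label a change score using its confidence interval and the SWC band."""
    lower = change - half_width
    upper = change + half_width
    if lower < -swc and upper > swc:
        return "unclear"    # interval spans both a decrease and an increase
    if upper > swc:
        return "increased"  # a decrease beyond the SWC is ruled out
    if lower < -swc:
        return "decreased"  # an increase beyond the SWC is ruled out
    return "trivial"        # interval sits entirely inside the SWC band


half_width_cm = ci_half_width(1.0)  # typical error of 1 cm from the example
swc_cm = 0.68

# Hypothetical pre-season changes in jump height (cm).
labels = [classify_change(c, swc_cm, half_width_cm) for c in (-3.5, 0.5, 3.5)]
print(f"CI half-width: {half_width_cm:.2f} cm, labels: {labels}")
```

With a half-width of about 2.47 cm, an athlete needs a change of a little over 1.8 cm in either direction before the interval clears the opposite side of the SWC band.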

From the analytical approach outlined above, we now have a method that informs our feedback of physical performance to our athletes and coaches. Additionally, we now have a means to communicate what small changes are required to improve performance.

Freelap USA: In the process of “getting athletes stronger” what key factors are you really looking for?

Craig Turner and Nate Brookreson: The foremost criterion within the process of getting an athlete stronger is whether the athlete moves effectively within the movement pattern. This is primarily informed through our movement assessment procedures (discussed within the section below). When we are satisfied the athlete “moves well,” then we are more comfortable loading that pattern in an attempt to optimize characteristics such as force and velocity.

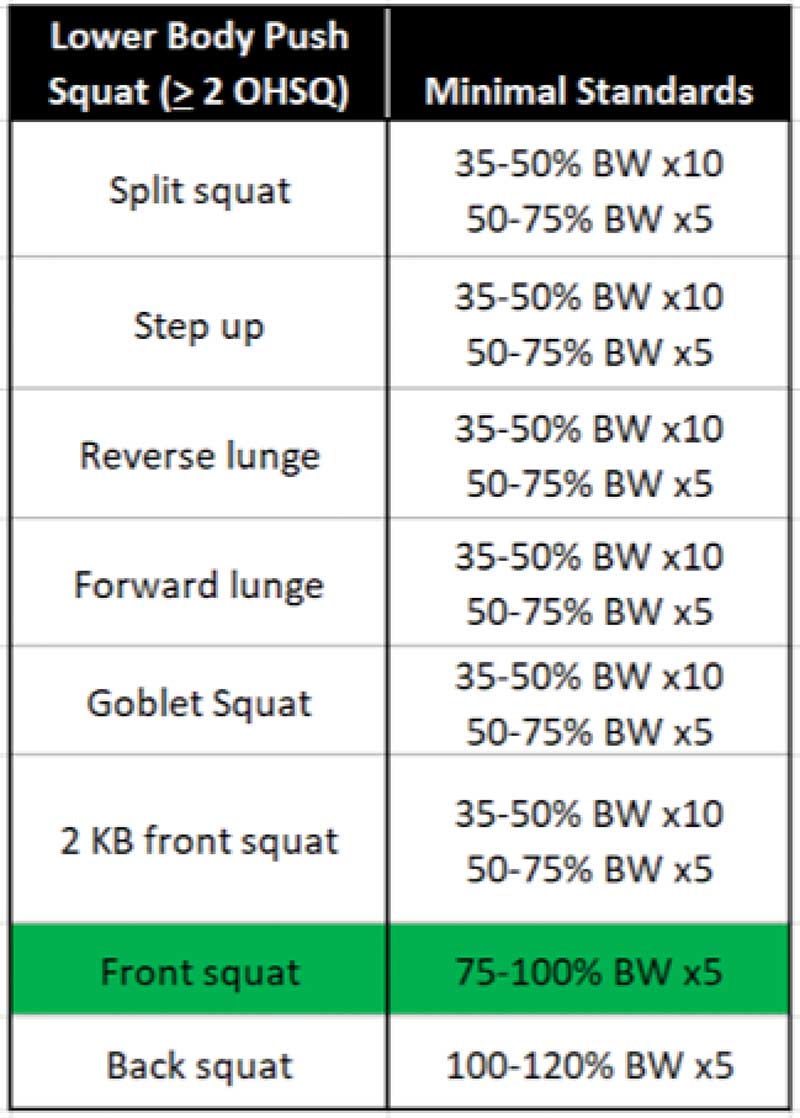

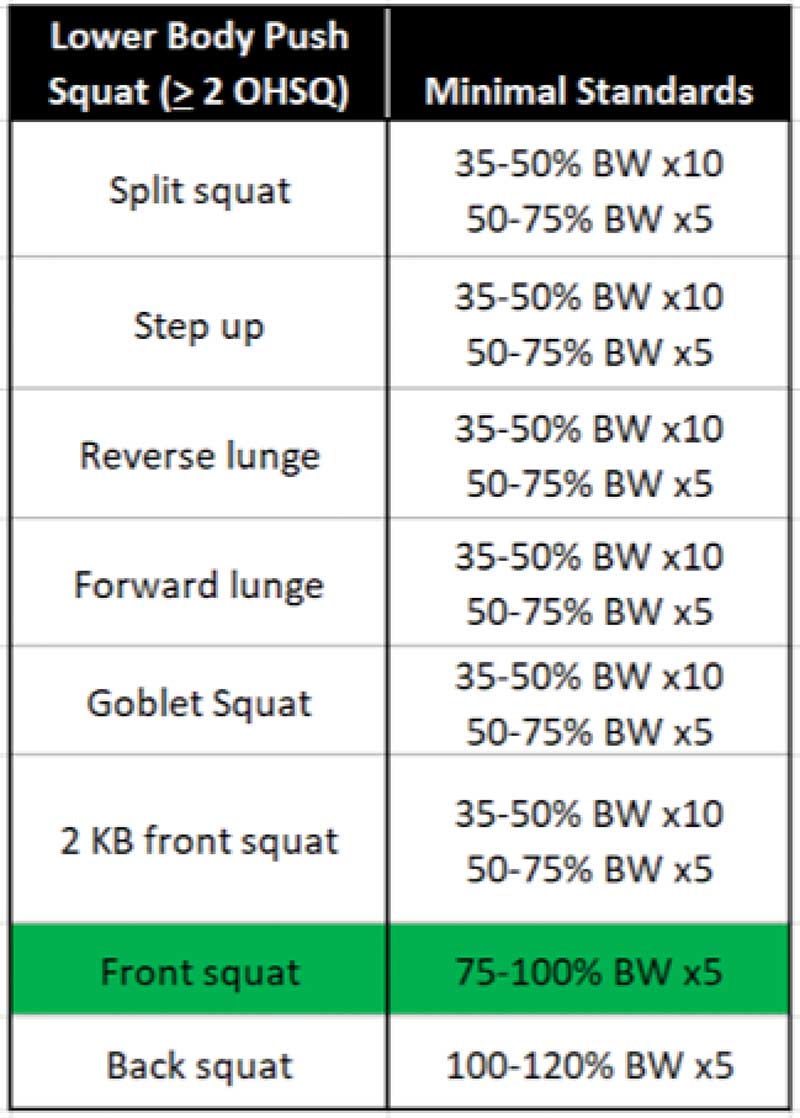

From a programming perspective, we have developed a graded exercise progression chart where an athlete must achieve minimum demonstrable standards before progressing to more advanced programming methods. An example of this is shown in Figure 3.

This provides us with a structure of operational standards to guide athlete progressions. This also allows us to communicate to the athlete and help them understand the programming journey across their collegiate career.

Lastly, the sport in which the athlete participates is also a guiding factor to the “getting stronger” notion. There is likely a point of diminishing returns for some of our sports when it comes to strength training (e.g., track sprinters, for whom the improvement of velocity qualities is the primary factor). Therefore, an appraisal of the sport is also highly important as we look to improve strength qualities without inhibiting the sport’s primary factors.

In addition, the aforementioned jump testing (and other testing protocols, such as force-velocity profiling within MySprint) serves as another source of information to help guide the direction of programming for the individual athlete. For example, some athletes may benefit from developing force qualities (e.g., those showing low eccentric and concentric average force in the jump). Others may lack velocity qualities (e.g., low peak velocity at takeoff or low concentric peak velocity) and may therefore require more velocity-based exercise selection. Therefore, an appropriate appraisal of these qualities, in addition to the sport, helps serve as a guide to the direction of programming.

Freelap USA: What is your approach in terms of movement and mobility screening and assessment in athletes?

Craig Turner and Nate Brookreson: Assessment is a crucial part of the performance process in order to evaluate current physiological capabilities, identify limiters to resiliency, and serve as a prerequisite to the creation of the training plan. An assessment is intended to be convenient, quick, and actionable, but in the collegiate environment, the most important element of the assessment is that multiple practitioners—namely the S&C and sport medicine staff—rate and quantify the assessment similarly. This allows both professionals to utilize the assessment as an audit when an intervention is applied in training or rehabilitation.

In our environment, we tend to screen first for pain; then fundamental mobility of the ankle in a closed-chain position, a supine leg-raising pattern, and a reciprocal upper extremity reaching pattern; motor control in a high-threshold stabilization pattern; and finally, a functional pattern representing a commonly seen athletic position in sport. Additionally, we utilize the anterior reach of the Y-Balance Lower Quarter assessment and the superolateral reach of the Y-Balance Upper Quarter assessment. The rationale for each assessment is listed in Table 2.

| Assessment | Rationale |
| --- | --- |
| Closed chain dorsiflexion | Evaluation of ankle mobility; looking for 35-45° with <4° difference with no pain or pinching in front of ankle. |
| FABER/FADIR | First screen of hip mobility; FABER = parallel to ground, FADIR = 35° with no pain or pinching in the front of the hip. |
| FMS active straight-leg raise | Fundamental mobility during a leg-raising pattern; necessary for hip hinging. |
| FMS shoulder mobility | Fundamental mobility of shoulder in reciprocal reaching; necessary for overhead pressing. |
| FMS overhead squat | Functional pattern demonstrating optimal positioning for athletic maneuvers. |
| YBT-LQ anterior reach | Assessment of dynamic postural control; reach >70% leg length with <4 cm asymmetry. |
| YBT-UQ superolateral reach | Identify upper extremity and trunk mobility in the open kinetic chain in the reaching limb as well as mid-range limitations and asymmetries of upper extremity and core stability in the closed kinetic chain in the stabilizing arm; reach >70% arm length with <4 cm asymmetry. |

Table 2. Assessment is a crucial part of the performance process in order to evaluate current physiological capabilities, identify limiters to resiliency, and serve as a prerequisite to the creation of the training plan. This is an overview of our assessment process.
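As an illustration of how the YBT-LQ anterior reach standard in Table 2 could be checked, here is a minimal sketch. It assumes both limbs must exceed 70% of leg length and that a single leg length measurement is used for both sides; these are assumptions made for the example, as are the function name and the reach values.

```python
def ybt_anterior_screen(left_reach_cm, right_reach_cm, leg_length_cm):
    """Normalize anterior reach to leg length and apply the Table 2 cut-offs."""
    left_pct = left_reach_cm / leg_length_cm * 100
    right_pct = right_reach_cm / leg_length_cm * 100
    asymmetry_cm = abs(left_reach_cm - right_reach_cm)
    # Assumption: both limbs must clear 70%, with under 4 cm between sides.
    meets_standard = left_pct > 70 and right_pct > 70 and asymmetry_cm < 4
    return {
        "left_pct": left_pct,
        "right_pct": right_pct,
        "asymmetry_cm": asymmetry_cm,
        "meets_standard": meets_standard,
    }


# Hypothetical athlete: 66 cm left reach, 61 cm right reach, 90 cm leg length.
result = ybt_anterior_screen(66.0, 61.0, 90.0)
print(result)
```

Here the right side falls short of 70% and the 5 cm side-to-side difference exceeds the 4 cm limit, so the athlete would be flagged for follow-up.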

Nate Brookreson was named Director of Strength & Conditioning for Olympic Sports at NC State in June 2015. His primary training duties are with women’s basketball, swimming, and diving, and men and women’s golf, while supervising the implementation of training for over 500 student-athletes.

Prior to NC State, Brookreson was the Director of Athletic Performance for Olympic Sports at the University of Memphis from August 2013 until June 2015. While at Memphis, Brookreson worked primarily with men and women’s soccer, men and women’s golf, and track & field sprinters, while supervising training for the Olympic sports department. Before heading to Memphis, Brookreson was the Director of Athletic Performance at Eastern Washington University from October 2010 until August 2013, working primarily with football and volleyball while supervising the training of all EWU student-athletes. He also served as an assistant at the same institution from August 2008 until his appointment to director. Additionally, he served as a student strength and conditioning coach at the University of Georgia, assisting with baseball, softball, swimming and diving, and women’s tennis.

A native of Lacey, Washington, Brookreson earned his bachelor’s degree from Central Washington University and master’s degree from Eastern Washington University in exercise science. He is certified through the National Strength and Conditioning Association (CSCS) and the Collegiate Strength and Conditioning Coaches Association (SCCC).

Nate is married to Kelsey Brookreson and has two sons, Blaise and Brock.

Jump Testing, Strength Standards, and Assessment for University Athletes

[mashshare]

Freelap USA: What types of jump testing do you utilize with your athlete populations?

Craig Turner and Nate Brookreson: A staple test utilized between our sports is the countermovement vertical jump assessment. This is performed on a dual force platform using the ForceDecks software (from Vald Performance) and is our primary go-to for jump assessment within our athlete groups. This form of jump has an abundance of supporting research demonstrating good validity and reliability for athlete populations. We utilize this test most frequently with our athletes, due to its ability to analyze different phases in the jump (i.e., eccentric and concentric phase, duration and forces produced). This allows for the identification of several key variables (discussed later) that help us understand both the movement strategy and the performance of maximal jumping efforts in a time-efficient manner (~1 minute per athlete).

We use the countermovement vertical jump assessment the most, as it analyzes different jump phases. Share on XMost recently we have begun to evaluate the force-velocity characteristics of our athletes in more detail. This method was inspired by the research of Jimenez-Reyes et al (2016). We utilize the countermovement jump test even further by performing additional CMJ repetitions (2 reps) at differing absolute loads (e.g., 20kg, 40kg, and 60kg for a male soccer player; 15kg, 25kg, and 40kg for a female soccer player). This allows us to evaluate the force-velocity relationship with increased external load (i.e., higher force output and lowered velocity).

The choice of absolute loads versus relative loads is due to the linear relationship of force-velocity that we see as these loads increase (see the work of JB Morin or Pierre Samozino for more detail on this topic). One rule is that the athlete must jump >4 inches for that load to be included within the analysis. We can then compare the athlete’s profile to a “predicted optimal profile” to assess which end of the force-velocity curve the athlete needs to work on to further improve jump height.

Differences in jump assessment tools also exist between our sports, which help us delve further into specific qualities that may be relevant for that specific sport. For example, with women’s basketball we assess an approach vertical jump, and a lateral bound and stick. Another example would be within soccer, where we utilize a broad jump and single leg broad jump, given the suggested associations with horizontal force production mechanisms and other athletic qualities such as acceleration. Such tests further represent qualities that are utilized within the sports themselves and provide more specificity to inform our training approach.

Freelap USA: How specifically does working with a wide range of jump types (such as seen in swimming athletes) impact how you view jumping in sport?

Craig Turner and Nate Brookreson: A close examination of the sports we work with currently would be all that’s necessary to formulate an approach to jump training. Therefore, a detailed needs analysis is performed within each of our sports to direct the decision-making process around what jumps are appropriate to monitor these athletes.

For example, notational analysis of basketball reveals that between 40 and 60 maximal jumps occur during competition. So, from the perspective of specific preparation, jump training is necessary and therefore should be formally assessed in the testing setting. Additionally, many injuries associated with women’s basketball, primarily of the ankle and knee variety, require the athlete to land optimally and effectively attenuate force.

When designing the testing battery for women’s basketball, we examine several jump strategies to determine the underlying physical qualities needed for success in the sport. The countermovement jump on the force plates allows us to reliably measure lower body explosive power, as well as determine the force-velocity relationship of the athletes. One-step vertical jump testing is an examination of the transfer of horizontal to vertical force, which is a characteristic of many discrete activities on the basketball court (such as rebounding from the perimeter, jump shooting off the dribble, helping defense, and a one-step layup). The lateral bound and stick looks at the generation of power of the frontal plane and reflects the ability to cover distance in shuffling actions, which make up around a quarter of the movement happening on the court. These jumps all represent unique, discrete actions that occur on the court and are reliable from a test-retest perspective.

While swimming is a sport that has unique physical and kinematic properties, testing still needs to be reliable and sensitive to change (explained below). The goal of any testing is to inform the athlete and coach about actual changes in performance. Therefore, we utilize squat and countermovement jump testing with our swim population.

The goal of any testing is to inform the athlete and coach about actual changes in performance. Share on XThe start and walls represent a greater relative proportion of the race at the collegiate level due to swimming in a short course yards (SCY) pool rather than the long course meters (LCM) events seen in the Olympics. Additionally, research has examined the relationship between countermovement jump variables and sprint performance, with several reporting a correlation between lower body explosive power in a CMJ and start performance (Carvahlo et al., 2017; Maglischo et al., 2003). While some argue that the kinetics of the start are more complex than a CM or squat jump (Benjanuvatra et al., 2007), CM and squat jumps have similar loading strategies and lower limb contribution characteristics. In the future, it would be beneficial to examine the transfer of jump monitoring to specific event performance, particularly in the collegiate SCY population.

Freelap USA: What daily, weekly, or monthly markers do you assess in your sports to measure progress?

Craig Turner and Nate Brookreson: A brief overview of some of the variables we utilize in our feedback approach is outlined below:

Daily/Weekly:

The CMJ test is typically utilized 1-2 times weekly within most of our sports. This test not only gives us a measure of jump performance improvement, but also a representation of athlete readiness (i.e., is the athlete’s performance declining, or are movement strategies changing due to fatigue, etc.). Table 1 shows a breakdown of some of the key performance indicators and how we use them.

| Variable | How It’s Measured | How We Use It |
| --- | --- | --- |
| Jump Height (cm/inches) | Estimated from flight time. | Provides insight into overall physical jump performance. Primary feedback mechanism for athletes (due to ease of understanding). |
| Relative Peak Power (W/kg) | Highest power achieved during the jump relative to body weight. | To assess overall power improvements, individualized to the athlete’s weight. Secondary feedback mechanism for athletes (to compare with peers). |
| Concentric Impulse (Ns) | Total force exerted in the concentric phase multiplied by time taken. | Provides information to assess the concentric phase of the jump and how this influences jump performance. |
| Force @ Zero Velocity (N) | Force exerted at concentric phase onset (i.e., velocity is at zero). | Lowered force output may represent diminished neuromuscular function (a means of monitoring fatigue). Targeted strength training may also look to improve force generating qualities, reflected in higher F@0 output. |
| Eccentric Duration (s) | Duration of the eccentric phase in seconds. | May represent a change in movement mechanics (i.e., a longer eccentric/concentric phase), which may provide insights into fatigue and jump strategy utilized. |
| Concentric Duration (s) | Duration of the concentric phase in seconds. | May represent a change in movement mechanics (i.e., a longer eccentric/concentric phase), which may provide insights into fatigue and jump strategy utilized. |

Table 1. A breakdown of some of the key performance indicators the countermovement jump test measures, and how we use them.

Monthly/Seasonal:

We use our other jump-based tests more periodically as part of the sports testing battery to assess outcomes at different time points during the collegiate-athlete calendar. Typically, we observe a pre-season start and pre-season end measure, as well as a mid-season and post-season measure (when appropriate). For certain athletes (i.e., those on specific development programs), these measures are used more routinely in relation to the periodized strategy (e.g., pre-, mid-, and post-testing around a six-week training block within the off-season). Additionally, these measures are utilized routinely within our return to sport protocols when an athlete is progressing back from injury. These tests shape some of the athlete criteria needed to inform progressions to more speed- and conditioning-based (specific to the sport) tests.

We utilize the analytical approach described below to assess each athlete’s progressions within these specific tests. This approach is a framework to monitor our athletes.

Step 1: Familiarization

First, we aim to understand the specific measurement within the population. When first testing, we conduct a series of repetitions (generally 3-6) for the given test. We then evaluate the athlete’s coefficient of variation (CV% = standard deviation / mean × 100) for the given test measure. This gives us a quick representation of how much that athlete varies within the test. We can also assess whether they are getting better at the test as a result of a “warm-up effect” (e.g., jump height increases with the number of repetitions completed), or whether their strategy has large variations, potentially indicating a lack of familiarity or some external factors influencing the test (e.g., fatigue/motivation).

Generally speaking, it is typically the former with our athlete populations, as jump tests (such as the CMJ and broad jump) are commonly used in the training process and learning effects are typically small. In the case that we see a “warm-up effect” and the athlete keeps getting better across the repetitions and hits their highest jump on the final rep (within our performance testing battery we generally cap it at three repetitions due to time constraints), we then either:

1) Add reps until jump height plateaus; or

2) Look to modify this athlete’s warm-up strategy so that peak jump height is hit within the desired three-repetition range.
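As a rough illustration of the familiarization check, the sketch below computes CV% for one athlete's repetitions and flags a possible warm-up effect. The jump heights and function names are hypothetical, and treating "every rep higher than the last" as a warm-up effect is a simplifying assumption rather than a fixed rule.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """Return CV% = sample SD / mean * 100 for one athlete's repetitions."""
    return stdev(values) / mean(values) * 100


def shows_warm_up_effect(values):
    """Flag a possible warm-up effect: each rep is higher than the one before.

    Assumption: a strictly increasing series is used as the trigger here.
    """
    return all(later > earlier for earlier, later in zip(values, values[1:]))


# Hypothetical jump heights (cm) for one athlete across three repetitions.
reps_cm = [38.0, 39.0, 40.0]
cv_percent = coefficient_of_variation(reps_cm)
warm_up = shows_warm_up_effect(reps_cm)
print(f"CV = {cv_percent:.1f}%, possible warm-up effect: {warm_up}")
```

When the flag is raised, the two options listed above apply: add repetitions until jump height plateaus, or adjust the warm-up.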

Step 2: Understanding Noise

Think of “noise” as the measurement error of the test. When things become noisier, it becomes increasingly difficult to hear what is going on. So, as the word implies, more noise = more error, and more error makes our lives more difficult when interpreting testing data—especially when we are attempting to judge if an athlete has improved or not (i.e., understanding a real change by separating that change from the test error). Therefore, it is important to try and understand what the “noise” of the test we are employing is. This means we need to understand where noise comes from and then quantify it.

One way of understanding the test noise is to measure an athlete multiple times over a period of time when we don’t expect this athlete’s performance to change. For this, we typically use 4-6 testing occasions within the first 14 days of a training program (though others have suggested using more test occasions), as this is what is practical for us to complete. Then we calculate the within-subject standard deviation (this can be easily calculated in programs like Excel). This value would represent the noise.
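For readers working outside Excel, a minimal sketch of the within-subject standard deviation follows. The scores are hypothetical, and pooling athletes by averaging their individual variances is an assumption that fits best when every athlete has the same number of testing occasions.

```python
from math import sqrt
from statistics import mean, variance


def within_subject_sd(scores_by_athlete):
    """Pooled within-subject SD: square root of the mean per-athlete variance.

    Assumption: every athlete was tested on the same number of occasions.
    """
    return sqrt(mean(variance(scores) for scores in scores_by_athlete.values()))


# Hypothetical jump heights (cm) over four occasions in the first 14 days.
scores_cm = {
    "athlete_a": [40.0, 41.0, 39.0, 40.0],
    "athlete_b": [35.0, 36.0, 36.0, 35.0],
}
noise_cm = within_subject_sd(scores_cm)
print(f"Within-subject SD (noise) = {noise_cm:.2f} cm")
```

For a single athlete, the same idea reduces to the standard deviation of that athlete's own scores.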

We may not always get the opportunity to perform multiple tests, so we also have another way of calculating the test noise. Here we conduct a “reliability” type study with our athletes. Ideally, you want to conduct the reliability study over the same time span that you will use between tests, though this may not always be feasible for tests performed several weeks apart. Therefore, we typically conduct two tests separated by a period of seven days (conducted at the same time of day within the training week, following an off day).

We use such a selection because no major performance improvements should be shown within this period and other influences (e.g., fatigue) should be limited due to the preceding off day. This allows us to begin to understand potential “noise” associated with the test metrics. This begins to examine the reliability associated with these measures, which becomes a useful source of information for our further monitoring in subsequent tests.

Example:

We conducted a baseline jump test (CMJ) at the start of the pre-season with a group of athletes utilizing a force plate. We then conducted the same test seven days later following a day off. From this data, we can calculate the jump height and then the difference between these two test scores. In a perfect world, there would be no difference between these tests as athletes shouldn’t have had any performance improvements.

However, when it comes to athlete testing, perfect is a rare commodity. Unfortunately, there will likely be variation in the measurement. This typically comes from two sources:

1) Technical error of the measurement device (i.e., the calculations used to estimate jump height from the force plate and software).

2) Biological variation induced by the athlete (causing natural day-to-day fluctuations in the measurement).

Luckily, we can use programs like Microsoft Excel and helpful statistical spreadsheets to calculate the amount of this variation (see Will Hopkins’ Sportsci.org for resources). Simply, we can calculate the difference score for each athlete (Day 2 minus Day 1), then the standard deviation (SD) of these difference scores (known as the between-subject SD of differences), then divide this number by the square root of 2 (as we have two testing days). This calculation then provides us with our “standard error of measurement,” which can also be referred to as “typical error” (i.e., the typical error we would likely expect from the test).

Equation 1: Typical error = standard deviation of difference scores / √(2)

Within our example for jump height, we calculated this error as approximately 1 cm (0.96 rounded up) in raw units. So, if one of the athletes improves by 0.5 cm (a value that is less than the test noise), we are less confident that a real improvement has occurred, because the change sits within the measurement error. This allows us to accept the uncertainty around some of our measures, and the calculation can be performed on any of the variables collected. Simply put, not every performance change is a real change.
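Equation 1 can be sketched in a few lines. The two sets of scores below are hypothetical and are not the data behind the 0.96 cm figure; the function simply follows the steps described above (difference scores, their standard deviation, then division by the square root of 2).

```python
from math import sqrt
from statistics import stdev


def typical_error(day1, day2):
    """Typical error = SD of the (Day 2 - Day 1) difference scores / sqrt(2)."""
    diffs = [second - first for first, second in zip(day1, day2)]
    return stdev(diffs) / sqrt(2)


# Hypothetical jump heights (cm) for four athletes, tested seven days apart.
day1_cm = [40.0, 35.0, 42.0, 38.0]
day2_cm = [41.0, 34.0, 43.0, 39.0]
te_cm = typical_error(day1_cm, day2_cm)
print(f"Typical error = {te_cm:.2f} cm")
```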

Step 3: Understanding a Practically Important Change

Within all our tests, what we are really interested in is the smallest improvement that influences performance. There are two main methods that we can utilize to calculate thresholds that represent this practically important change. One method is referred to as the “minimal clinically important difference.” For this, we can typically look at the research and find when our measure has been used to predict performance outcomes and utilize this value or conduct our own analysis.

However, for most of our tests we have looked to use a method based on the Cohen’s effect size principle. For this, we look at how our athletes’ performances vary compared to one another (between subject SD) and apply a factor to determine the change required to move their position within the distribution. For the most part, we utilize testing batteries that are physical surrogates to actual competitive performance. Therefore, we use the following equation to calculate our smallest worthwhile change (SWC):

Equation 2: SWC = between subject standard deviation * 0.2

(Where the between subject SD is taken from the baseline scores of all the athletes from Test Day 1.)
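Equation 2 translates directly into code. The baseline scores below are hypothetical; the 0.2 factor is the one given in the equation.

```python
from statistics import stdev


def smallest_worthwhile_change(baseline_scores, factor=0.2):
    """SWC = between-subject SD of the Test Day 1 scores multiplied by 0.2."""
    return factor * stdev(baseline_scores)


# Hypothetical Test Day 1 jump heights (cm) for five athletes.
baseline_cm = [36.0, 38.0, 40.0, 42.0, 44.0]
swc_cm = smallest_worthwhile_change(baseline_cm)
print(f"SWC = {swc_cm:.2f} cm")
```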

The SWC can often be thought of as the “signal” within our test (the smallest practical change). In an ideal world, this signal would be greater than our test noise. However, in physical performance tests the noise is generally greater than the signal. This doesn’t necessarily mean that the test isn’t useable (though if the noise is four times greater than SWC, you should perhaps consider finding a better test).

For our earlier example of jump height, we had a noise of 1 cm. Using the above smallest worthwhile change equation on Test Day 1, we calculated the SWC as 0.68 cm. You quickly realize that the SWC < NOISE (0.68 cm < 1 cm). As these values are pretty close (the SWC is greater than half the noise), we can still use this test.

Luckily, there are resources out there that can help us interpret these changes. A very simple rule of thumb is that a change greater than the signal plus the noise has a greater likelihood of being meaningful. Additionally, if we want to calculate higher boundaries (e.g., a moderate or large change), we can multiply the between-subject standard deviation by a higher value (0.6 for moderate, 1.2 for large).
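The thresholds and checks from this step can be put together as a short sketch. The between-subject SD of 3.4 cm is back-calculated from the 0.68 cm SWC (0.68 / 0.2) and is therefore an assumption, as is reading the rule of thumb as "change larger than SWC plus noise." The function names are illustrative only.

```python
def magnitude_thresholds(between_subject_sd):
    """Small, moderate, and large change thresholds (0.2, 0.6, 1.2 x SD)."""
    return {
        "small": 0.2 * between_subject_sd,
        "moderate": 0.6 * between_subject_sd,
        "large": 1.2 * between_subject_sd,
    }


def noise_to_signal_ratio(noise, swc):
    """How many times larger the test noise is than the SWC."""
    return noise / swc


def likely_meaningful(change, swc, noise):
    """One reading of the rule of thumb: |change| exceeds SWC + noise."""
    return abs(change) > swc + noise


# Assumed values: SD back-calculated from SWC = 0.68 cm; noise = 1 cm.
thresholds = magnitude_thresholds(3.4)
ratio = noise_to_signal_ratio(noise=1.0, swc=0.68)
print(thresholds, f"noise/SWC = {ratio:.2f}")
print(likely_meaningful(2.0, swc=0.68, noise=1.0))  # 2.0 cm vs 1.68 cm
```

A ratio well below four, as here, is consistent with keeping the test in use.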

Step 4: Analysis and Feedback

Now that we have our values of test noise and signal, we are in a position to monitor and analyze our data efficiently, enabling us to make fast decisions within our feedback approaches to athletes and coaches. Figure 2 below is a visual for taking our jump height example above and applying it to 20 female soccer athletes tested at the beginning and end of pre-season.

Deconstructing Figure 2, we have a grey shaded area that represents our SWC (0.68 cm). We have each athlete’s value and we have a range of true values that each of the athletes could have performed, known as the confidence interval (black shaded bars). The confidence interval was calculated using the Excel TINV function. For a 90% CI, the equation was as follows:

Equation 3: 90% CI = ± TINV(0.1, 16) * (sqrt(2) * 1) = ± 2.47 cm

(0.1 represents 90% probability; 16 = degrees of freedom; sqrt(2)*1 is the adjusted typical error)

We can now start to provide a magnitude-based inference around our data utilizing the work of Hopkins et al. We see Athletes 1-4 have a reduced jump height (red dots), suggesting a negative response to pre-season. Athletes 5-16 were “unclear” (yellow dots). As their boundary of true values crosses both the increased and reduced sides of change, we cannot be sure “statistically” whether these athletes have changed. Athletes 17-20 increased (green dots), suggesting an improvement following the pre-season training period.
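A simplified sketch of this traffic-light logic is shown below. It hard-codes the t value that Excel's TINV(0.1, 16) returns (about 1.746) rather than calling a statistics library, and it uses a coarse overlap rule as a stand-in for a full magnitude-based inference; the "trivial" outcome and the function names are additions for completeness, not part of Figure 2.

```python
from math import sqrt

# Two-tailed 90% t value for 16 degrees of freedom, i.e. Excel TINV(0.1, 16).
T_CRIT_90_DF16 = 1.746


def ci_half_width(typical_error, t_crit=T_CRIT_90_DF16):
    """Half-width of the CI for a change score: t * sqrt(2) * typical error."""
    return t_crit * sqrt(2) * typical_error


def classify_change(change, swc, half_width):
    """Coarse classification of one athlete's change score (assumed rule)."""
    lower, upper = change - half_width, change + half_width
    if lower < -swc and upper > swc:
        return "unclear"  # interval spans both a real decrease and increase
    if lower >= -swc and upper <= swc:
        return "trivial"  # interval sits entirely inside the SWC band
    return "increased" if change > 0 else "reduced"


half_width_cm = ci_half_width(typical_error=1.0)
print(f"90% CI half-width = {half_width_cm:.2f} cm")
for change_cm in (-2.5, 0.5, 3.0):  # hypothetical pre-season changes (cm)
    print(change_cm, classify_change(change_cm, 0.68, half_width_cm))
```

With a 2.47 cm half-width and a 0.68 cm SWC, an athlete needs a change of roughly 1.8 cm in either direction before this rule stops returning "unclear."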

From the analytical approach outlined above, we now have a method that informs our feedback of physical performance to our athletes and coaches. Additionally, we now have a means to communicate what small changes are required to improve performance.

Freelap USA: In the process of “getting athletes stronger” what key factors are you really looking for?

Craig Turner and Nate Brookreson: The foremost criterion within the process of getting an athlete stronger is whether the athlete moves effectively within the movement pattern. This is primarily informed through our movement assessment procedures (discussed within the section below). When we are satisfied the athlete “moves well,” then we are more comfortable loading that pattern in an attempt to optimize characteristics such as force and velocity.

From a programming perspective, we have developed a graded exercise progression chart where an athlete must achieve minimum demonstrable standards before progressing to more advanced programming methods. An example of this is shown in Figure 3.

This provides us with a structure of operational standards to guide athlete progressions. This also allows us to communicate to the athlete and help them understand the programming journey across their collegiate career.

Lastly, the sport in which the athlete participates is also a guiding factor to the “getting stronger” notion. There is likely a point of diminishing returns for some of our sports when it comes to strength training (e.g., track sprinters, for whom the improvement of velocity qualities is the primary factor). Therefore, an appraisal of the sport is also highly important as we look to improve strength qualities without inhibiting the sport’s primary factors.

In addition, the aforementioned jump testing (and other testing protocols such as force-velocity profiling within MySprint) also serves as another source of information to help guide the direction of programming for the individual athlete. For example, some athletes may benefit from developing force qualities (e.g., those with low eccentric and concentric average force in the jump). Others may lack velocity qualities (e.g., low peak velocity at takeoff or low concentric peak velocity) and may therefore require more velocity-based exercise selection. An appropriate appraisal of these qualities, in addition to the sport, helps serve as a guide to the direction of programming.

Freelap USA: What is your approach in terms of movement and mobility screening and assessment in athletes?

Craig Turner and Nate Brookreson: Assessment is a crucial part of the performance process in order to evaluate current physiological capabilities, identify limiters to resiliency, and serve as a prerequisite to the creation of the training plan. An assessment is intended to be convenient, quick, and actionable, but in the collegiate environment, the most important element of the assessment is that multiple practitioners—namely the S&C and sport medicine staff—rate and quantify the assessment similarly. This allows both professionals to utilize the assessment as an audit when an intervention is applied in training or rehabilitation.

In our environment, we tend to screen first for pain; then for fundamental mobility of the ankle in a closed-chain position, in a supine leg-raising pattern, and in a reciprocal upper extremity reaching pattern; then for motor control in a high-threshold stabilization pattern; and finally, for a functional pattern representing a commonly seen athletic position in sport. Additionally, we utilize the anterior reach of the Y-Balance Lower Quarter assessment and the superolateral reach of the Y-Balance Upper Quarter assessment. The rationale for each assessment is listed in Table 2.

| Assessment | Rationale |
| --- | --- |
| Closed chain dorsiflexion | Evaluation of ankle mobility; looking for 35-45° with <4° difference with no pain or pinching in front of ankle. |
| FABER/FADIR | First screen of hip mobility; FABER = parallel to ground, FADIR = 35° with no pain or pinching in the front of the hip. |
| FMS active straight-leg raise | Fundamental mobility during a leg-raising pattern; necessary for hip hinging. |
| FMS shoulder mobility | Fundamental mobility of shoulder in reciprocal reaching; necessary for overhead pressing. |
| FMS overhead squat | Functional pattern demonstrating optimal positioning for athletic maneuvers. |
| YBT-LQ anterior reach | Assessment of dynamic postural control; reach >70% leg length with <4 cm asymmetry. |
| YBT-UQ superolateral reach | Identify upper extremity and trunk mobility in the open kinetic chain in the reaching limb as well as mid-range limitations and asymmetries of upper extremity and core stability in the closed kinetic chain in the stabilizing arm; reach >70% arm length with <4 cm asymmetry. |

Table 2. An overview of our assessment process and the rationale for each assessment.
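To show how the numeric criteria in Table 2 might be applied, here is a minimal sketch for the YBT-LQ anterior reach. The measurements are hypothetical, and requiring both limbs to exceed 70% of a single leg-length value is an assumption about how the criterion is applied.

```python
def ybt_anterior_meets_criteria(left_reach_cm, right_reach_cm, leg_length_cm):
    """Check reach >70% of leg length on both sides with <4 cm asymmetry.

    Assumptions: both limbs must clear 70%, and one leg-length value is used.
    """
    normalized = [
        reach / leg_length_cm * 100 for reach in (left_reach_cm, right_reach_cm)
    ]
    asymmetry_cm = abs(left_reach_cm - right_reach_cm)
    return min(normalized) > 70 and asymmetry_cm < 4


# Hypothetical athlete: 90 cm leg length, 66 cm and 68 cm anterior reaches.
meets = ybt_anterior_meets_criteria(66.0, 68.0, 90.0)
print(f"Meets YBT-LQ anterior reach criteria: {meets}")
```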

Nate Brookreson was named Director of Strength & Conditioning for Olympic Sports at NC State in June 2015. His primary training duties are with women’s basketball, swimming, and diving, and men and women’s golf, while supervising the implementation of training for over 500 student-athletes.

Prior to NC State, Brookreson was the Director of Athletic Performance for Olympic Sports at the University of Memphis from August 2013 until June 2015. While at Memphis, Brookreson worked primarily with men and women’s soccer, men and women’s golf, and track & field sprinters, while supervising training for the Olympic sports department. Before heading to Memphis, Brookreson was the Director of Athletic Performance at Eastern Washington University from October 2010 until August 2013, working primarily with football and volleyball while supervising the training of all EWU student-athletes. He also served as an assistant at the same institution from August 2008 until his appointment to director. Additionally, he served as a student strength and conditioning coach at the University of Georgia, assisting with baseball, softball, swimming and diving, and women’s tennis.

A native of Lacey, Washington, Brookreson earned his bachelor’s degree from Central Washington University and master’s degree from Eastern Washington University in exercise science. He is certified through the National Strength and Conditioning Association (CSCS) and the Collegiate Strength and Conditioning Coaches Association (SCCC).

Nate is married to Kelsey Brookreson and has two sons, Blaise and Brock.
